Design Concepts

OE Design Approach

The Cloud Native paradigm

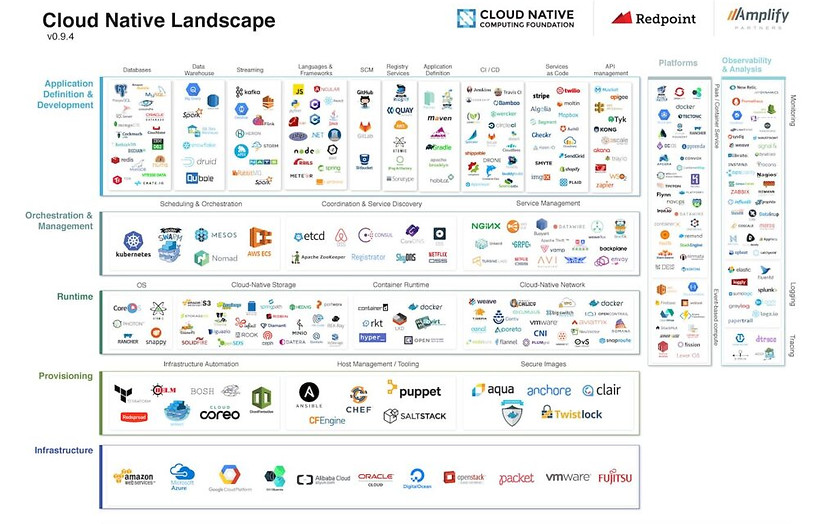

The software infrastructure that supports the integration and operation of consumer energy systems across data centres around the globe must be inherently distributed, and must run in different Cloud environments operated by multiple host organisations. These requirements map closely to the new domain of Cloud Native technologies, which have been designed specifically to operate in Cloud environments. Whether in managed environments (e.g. Azure, GCP, AWS), in private Clouds, or as frameworks that span multiple Clouds, building new applications in line with Cloud Native principles, in some form, is non-negotiable.

The challenge with Cloud Native, however, is its size and complexity. It can run anything and everything, and run it everywhere – which is extremely powerful on the one hand, but can also be dauntingly complex on the other. To architect systems in this new paradigm, you must constantly weigh power against complexity: choosing frameworks that are capable enough, while keeping them simple and reliable enough to manage in production environments.

Architectural separation

Perhaps the other main mindset change required in Cloud Native territory is that everything is programmable, and can therefore be fully automated. What was previously handled through manual configuration – network routing, firewalls, load balancers, security policies, server deployments, infrastructure provisioning, performance monitoring and scaling – is now part of the code deployment. This approach, often referred to as “infrastructure as code”, is immensely powerful, but it requires up-front work on the initial architecture and infrastructure frameworks to make the right technology choices and to integrate them all together.
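To make the infrastructure-as-code idea concrete, the sketch below shows the declarative model in miniature: infrastructure is described as a desired state, and tooling computes the create, update and delete actions needed to reach it from the actual state. It is a minimal illustration under stated assumptions only; the `Resource` type, the `plan` function and the resource kinds and names are hypothetical and do not correspond to any particular infrastructure-as-code tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resource:
    """Hypothetical declarative spec for one piece of infrastructure."""
    name: str
    kind: str        # illustrative kinds only, e.g. "firewall_rule"
    settings: tuple  # immutable (key, value) pairs so specs compare by value


def plan(desired: dict[str, Resource], actual: dict[str, Resource]) -> list[tuple[str, str]]:
    """Compute the actions that move the actual state towards the desired state."""
    actions = []
    for name, spec in sorted(desired.items()):
        if name not in actual:
            actions.append(("create", name))
        elif actual[name] != spec:
            actions.append(("update", name))
    for name in sorted(actual):
        if name not in desired:
            actions.append(("delete", name))
    return actions


# Desired state, as it might be kept under version control alongside the code.
desired = {
    "allow-telemetry": Resource("allow-telemetry", "firewall_rule", (("port", 8883),)),
    "ingress-lb": Resource("ingress-lb", "load_balancer", (("replicas", 3),)),
}
# Actual state, as it might be reported by the platform (hypothetical values).
actual = {
    "ingress-lb": Resource("ingress-lb", "load_balancer", (("replicas", 2),)),
    "legacy-vpn": Resource("legacy-vpn", "vpn_tunnel", ()),
}

print(plan(desired, actual))
# [('create', 'allow-telemetry'), ('update', 'ingress-lb'), ('delete', 'legacy-vpn')]
```

Once the actual state matches the desired state, `plan` returns an empty list, which is what lets the same definition be applied repeatedly and safely.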

Consequently, the openElectric design defines an underlying infrastructure layer for deploying compute, data and traffic management resources, and for managing the associated scale, resilience and security concerns. This layer also offers application developers an API abstraction over the infrastructure services, which tames some of the complexity.

Built upon this infrastructure layer is a second layer that defines the electricity domain model, together with the data structures and computations required for energy delivery services. This layer is tailored purely to the domain of digital electrification.
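A minimal sketch of this two-layer separation, assuming hypothetical names throughout: the domain service depends only on a small infrastructure interface (`EventStream`), so the underlying platform can change without touching domain code. The in-memory class merely stands in for whatever streaming or messaging service the infrastructure layer actually provides.

```python
from typing import Optional, Protocol


class EventStream(Protocol):
    """Hypothetical infrastructure-layer API offered to domain developers."""

    def publish(self, topic: str, payload: dict) -> None: ...

    def read(self, topic: str) -> list: ...


class InMemoryEventStream:
    """Stand-in for a real streaming or messaging service behind the same API."""

    def __init__(self) -> None:
        self._topics: dict = {}

    def publish(self, topic: str, payload: dict) -> None:
        self._topics.setdefault(topic, []).append(payload)

    def read(self, topic: str) -> list:
        return list(self._topics.get(topic, []))


class FeederLoadService:
    """Domain-layer service: it deals in feeders and kilowatts, and knows
    nothing about brokers, clusters or networks."""

    def __init__(self, stream: EventStream) -> None:
        self._stream = stream

    def record_load(self, feeder_id: str, kw: float) -> None:
        self._stream.publish(f"feeder/{feeder_id}/load", {"kw": kw})

    def latest_load_kw(self, feeder_id: str) -> Optional[float]:
        events = self._stream.read(f"feeder/{feeder_id}/load")
        return events[-1]["kw"] if events else None


service = FeederLoadService(InMemoryEventStream())
service.record_load("F12", 120.5)
service.record_load("F12", 80.0)
print(service.latest_load_kw("F12"))  # 80.0
print(service.latest_load_kw("F99"))  # None
```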

1. Infrastructure layer

In the Cloud Native universe, there are hundreds of Cloud infrastructure services that provide developer, deployment and runtime frameworks for compute and data processing, storage, eventing, traffic management, security, monitoring and so on. As the vast majority of these are existing open-source frameworks, choices need to be made, and there are a few fundamental characteristics that each chosen framework must meet:

- A robust, security-first, zero trust, dynamically configurable, change-managed and cyber-resilient architecture. For many frameworks, this means that security permeates all aspects of the architecture.

- Designed to operate in and across all Cloud platforms, global supply chains, data centres and hosts, and across public and private networks. In the Cloud Native sense, this also includes configurable "data planes", or layers that allow data to be securely routed between organisations.

- Defined declaratively wherever possible as reusable infrastructure components and images, independent of the application-layer microservices.

- Designed to be dynamically configurable and programmable by control plane and data plane automation, applications and user interfaces.

The important characteristic of this infrastructure is that it shares almost complete commonality with other sectors, and nothing in this layer should be customised for the electricity sector unless absolutely necessary. This makes the infrastructure easier to keep aligned with other sectors, and lets it draw on their open-source contributors.

2. Domain level foundations and services

Built on top of the infrastructure layer is a set of electrification domain microservices. These express several integral data services and their associated read/write operations, which together support foundational use cases for the operational reliability, security and functional compliance of DER systems. They include:

- Foundational raw data formats for real-time telemetry streaming, and for standing data from IoT resources and applications, distribution networks and energy markets.

- Protocol adapters for the DER, IoT, network and market communication standards widely used across the industry, such as OpenADR, IEEE 2030.5, OCPP and OCPI.
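These two building blocks work together: each protocol adapter translates messages from an external standard into one common internal telemetry format, so domain services never parse protocol messages directly. The following is a minimal sketch under stated assumptions; the `TelemetryReading` record, the adapter interface and the charger payload are all hypothetical, and the payload field names are illustrative only, not taken from OCPP, OCPI or any other standard.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Protocol


@dataclass(frozen=True)
class TelemetryReading:
    """Hypothetical common format that every protocol adapter emits."""
    resource_id: str     # DER or IoT device identifier
    measurand: str       # what was measured, e.g. "active_power"
    value: float
    unit: str            # e.g. "kW"
    timestamp: datetime  # always UTC


class ProtocolAdapter(Protocol):
    def to_readings(self, message: dict) -> list: ...


class ExampleChargerAdapter:
    """Adapter for a made-up, simplified charger message. The field names
    ("charger", "ts", "power_w") do NOT follow any real standard's schema."""

    def to_readings(self, message: dict) -> list:
        ts = datetime.fromtimestamp(message["ts"], tz=timezone.utc)
        # Normalise units at the edge: watts in, kilowatts out.
        return [TelemetryReading(message["charger"], "active_power",
                                 message["power_w"] / 1000.0, "kW", ts)]


def ingest(adapter: ProtocolAdapter, message: dict) -> list:
    # Downstream domain services only ever see TelemetryReading objects.
    return adapter.to_readings(message)


readings = ingest(ExampleChargerAdapter(),
                  {"charger": "CP-001", "ts": 1700000000, "power_w": 7400})
print(readings[0].value, readings[0].unit)  # 7.4 kW
```

Supporting another standard then means writing another adapter that emits the same record, rather than changing every consumer.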

Designing the domain layer is harder, as it requires some foresight of the multitude of use cases, both today and in the future, across multiple data and communications standards.

Cloud Native technology

Cloud Native technology stacks have emerged in the last five years as a fundamental pillar of modern enterprise architectures. Originating from the need to address the challenges of scalability, resilience and efficiency in cloud environments, Cloud Native technologies have been at the epicentre of the continuous deployment practices now adopted by nearly all of the major tech giants. The concept traces its roots to containerisation technologies, such as Docker, and has extended into orchestration platforms like Kubernetes and service mesh frameworks like Istio. These technologies are used by over half of the world's banks and telecommunications companies, and are seen as the gold standard for enterprise multi-language (polyglot) architectures.

Cloud Native stacks are used extensively in the openElectric architectures, providing modularity and portability across Cloud hosting environments, and allowing the adoption of hundreds of related containers, operational tools and development frameworks.

Zero Trust principles

Zero Trust security principles have gained significant adoption in cybersecurity circles, especially in critical infrastructure sectors such as defence, energy and aviation. Traditional security strategies focused on perimeter defences, assuming that internal networks were trustworthy. However, with the rise of sophisticated cyber threats and the widespread adoption of cloud computing, the Zero Trust philosophy takes as its starting point that no user or device, inside or outside the network, is inherently trustworthy.

The result of this posture is the principle of “never trust, always verify”. It places the emphasis on rigorous authentication, authorisation and continuous monitoring at every level, ensuring that access is granted only on a need-to-know and time-limited basis. This type of strategy works well for critical infrastructure projects, as it limits the impact – or the “blast radius” – of an incursion and creates more cyber-resilient systems.

The openElectric security model defines authorisation policies that dynamically bind data relationships between specific services, applications and IoT resources, creating micro-scale private networks as a key pillar of its continuous authorisation approach.
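A minimal sketch of how default-deny, time-limited bindings between services and resources might be evaluated, assuming hypothetical names throughout; it is not the openElectric policy engine. It covers only the authorisation step and assumes callers have already been authenticated (for example via mutual TLS identities).

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class Binding:
    """Hypothetical policy entry: `source` may perform `action` on `target`
    until `expires_at`."""
    source: str
    target: str
    action: str
    expires_at: datetime


class PolicyStore:
    def __init__(self) -> None:
        self._bindings: list = []

    def grant(self, source: str, target: str, action: str,
              ttl: timedelta, now: datetime) -> None:
        # Every grant is time-limited; there are no permanent bindings.
        self._bindings.append(Binding(source, target, action, now + ttl))

    def is_allowed(self, source: str, target: str, action: str,
                   now: datetime) -> bool:
        # Default deny: only an explicit, unexpired binding grants access.
        # Assumes `source` is an identity that has already been authenticated.
        return any(
            b.source == source and b.target == target and b.action == action
            and now < b.expires_at
            for b in self._bindings
        )


t0 = datetime(2024, 1, 1, tzinfo=timezone.utc)
policy = PolicyStore()
policy.grant("forecast-service", "meter-123", "read", timedelta(minutes=15), now=t0)

print(policy.is_allowed("forecast-service", "meter-123", "read", t0))   # True
print(policy.is_allowed("forecast-service", "meter-123", "write", t0))  # False
print(policy.is_allowed("billing-service", "meter-123", "read", t0))    # False
print(policy.is_allowed("forecast-service", "meter-123", "read",
                        t0 + timedelta(minutes=20)))                    # False: expired
```

Because every request is checked against a narrow, expiring binding, a compromised service can reach only the resources it is currently bound to, which is the “blast radius” argument in code form.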

Microservices and Service Mesh

Microservices are the latest incarnation of distributed, service-based architectures (or SOA), which have been around since the late 1990s. Although those earlier architectures were in theory superior to, and more flexible than, simple monolithic server applications, in practice they were clumsy, slower, more fragile and more complex to manage, in part because they could not configure or control the data traffic moving between services.

Service Mesh technology is the new software network infrastructure layer that handles communication between services in a distributed system. Its predecessor was traffic routing, load balancing and VPN software running inside network hardware. The advantage of a Service Mesh is that networking (L2-L7) capabilities are now fully decoupled from the hardware, and are consequently configurable and deployable as code as part of any new service, application or infrastructure.
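Because mesh routing is configuration rather than hardware, a change such as a canary release becomes a small, versioned piece of code. The sketch below, with hypothetical names, illustrates only the idea behind a weighted traffic split; real meshes express this in their own declarative resources (in Istio, for example, a `VirtualService` with weighted destinations) and enforce it in the proxies deployed alongside services, not in application code.

```python
import random
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    """Hypothetical routing rule: send `weight` percent of requests to `backend`."""
    backend: str
    weight: int


def validate(routes: list) -> None:
    # Reject configurations that would silently drop or over-allocate traffic.
    if sum(r.weight for r in routes) != 100:
        raise ValueError("route weights must sum to 100")


def pick_backend(routes: list, rng: random.Random) -> str:
    # Weighted random choice, as a proxy might make per request.
    return rng.choices([r.backend for r in routes],
                       weights=[r.weight for r in routes], k=1)[0]


# A canary release: 90% of traffic to v1, 10% to the new v2 (hypothetical services).
canary = [Route("tariff-v1", 90), Route("tariff-v2", 10)]
validate(canary)

rng = random.Random(42)  # seeded so the run is reproducible
counts = Counter(pick_backend(canary, rng) for _ in range(10_000))
print(counts)  # approximately a 90/10 split between tariff-v1 and tariff-v2
```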

The openElectric reference model is centred on the use of microservices operating across multiple Cloud data centres and networks, secured by service-to-service authentication, and flexible enough to dynamically discover, bind and route to other services running across the Cloud ecosystem.
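Dynamic discovery usually rests on a registry that tracks which service instances are currently alive. A minimal sketch, with hypothetical service names and addresses, in which instances hold time-limited leases and clients resolve only live ones; in practice this role is typically played by the platform (for example Kubernetes Services and DNS, or the mesh control plane) rather than by application code.

```python
class Registry:
    """Hypothetical service registry: instances register with a lease and must
    re-register (heartbeat) before it lapses to stay discoverable."""

    def __init__(self, ttl_s: float = 30.0) -> None:
        self.ttl_s = ttl_s
        # service name -> {address: lease expiry time}
        self._leases: dict = {}

    def register(self, service: str, address: str, now: float) -> None:
        # Registering again acts as a heartbeat and extends the lease.
        self._leases.setdefault(service, {})[address] = now + self.ttl_s

    def resolve(self, service: str, now: float) -> list:
        # Return only instances whose lease has not yet expired.
        leases = self._leases.get(service, {})
        return sorted(addr for addr, expiry in leases.items() if expiry > now)


reg = Registry(ttl_s=30)
reg.register("tariff-service", "10.0.1.5:8443", now=0)
reg.register("tariff-service", "10.1.7.9:8443", now=10)  # instance in a second cluster
print(reg.resolve("tariff-service", now=20))  # ['10.0.1.5:8443', '10.1.7.9:8443']
print(reg.resolve("tariff-service", now=35))  # ['10.1.7.9:8443'] (first lease lapsed at 30)
```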